Taking website screenshots is a routine task for many Linux users. We take them for a number of reasons, the most common being the need to document a project’s configuration or installation procedure.

GUI apps such as Flameshot help Linux users take these screenshots, which can then be embedded directly into a project’s documentation or archived for future reference.

However, as this article demonstrates, we do not always need a third-party GUI app to take website screenshots. We will look at command-line tools that can capture website screenshots directly from the Linux terminal.

1. Pageres – Capture Website Screenshots from the Command Line

The Pageres command-line tool generates website screenshots efficiently and can capture several websites in a single run. All we have to do is point it at the target URLs and specify the resolutions we want for the screenshots.

Pageres can be installed on all major Linux distributions via snap, so you need to have snap installed and enabled on your distribution.

The installation command for Pageres on Linux via snap is as follows.

$ sudo snap install pageres

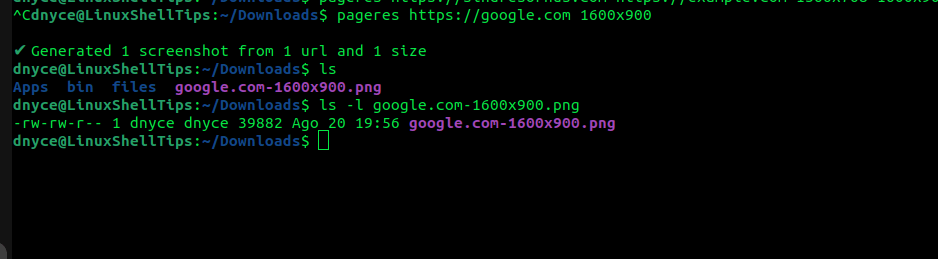

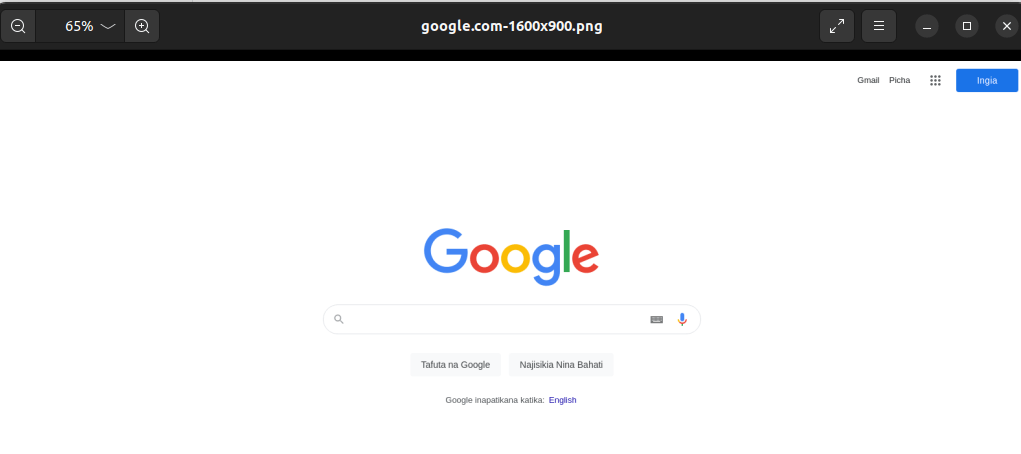

To capture a screenshot of a single website (e.g. google.com) at a specific resolution, run the following command.

$ pageres https://google.com 1600x900

The generated screenshot looked as expected.

To capture more than one website with pageres, list the URLs followed by the resolutions you want:

$ pageres https://ubuntumint.com https://github.com 1366x768 1600x900
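If you keep the sites you document in a text file, a small Bash function can pass them all to a single pageres run. The sketch below assumes a hypothetical list file with one URL per line; the function name `capture_from_list` and the `DRY_RUN` switch are illustrative additions, not pageres features.

```shell
#!/usr/bin/env bash
# Sketch: feed a list of URLs (one per line) to a single pageres run.
# capture_from_list and DRY_RUN are hypothetical helpers, not part of pageres.

# capture_from_list FILE [RESOLUTION...]
#   Reads URLs from FILE, skipping blank lines and lines starting with '#',
#   then calls pageres once with all URLs followed by the resolutions.
capture_from_list() {
  local list_file="$1"
  shift

  # Fall back to the two resolutions used in the example above.
  local -a sizes=("$@")
  if [ "${#sizes[@]}" -eq 0 ]; then
    sizes=(1366x768 1600x900)
  fi

  local -a urls=()
  local line
  # "|| [ -n "$line" ]" also reads a last line that lacks a trailing newline.
  while IFS= read -r line || [ -n "$line" ]; do
    # Ignore empty lines and comment lines.
    [[ -z "$line" || "$line" == \#* ]] && continue
    urls+=("$line")
  done < "$list_file"

  if [ "${#urls[@]}" -eq 0 ]; then
    echo "no URLs found in $list_file" >&2
    return 1
  fi

  # With DRY_RUN=1, print the command instead of running it.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo pageres "${urls[@]}" "${sizes[@]}"
  else
    pageres "${urls[@]}" "${sizes[@]}"
  fi
}
```

For example, after sourcing the file that defines it, `capture_from_list urls.txt 1366x768 1600x900` passes every listed URL and both resolutions to pageres, while `DRY_RUN=1 capture_from_list urls.txt` only prints the command it would run.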

Pageres is a handy tool for taking website screenshots. More usage details and examples are available on its help page.

$ pageres -h

2. CutyCapt – Capture WebKit’s Rendering of a Web Page from the Command Line

The CutyCapt command-line tool renders web pages using the QtWebKit library and saves the result as an image. It supports a variety of output formats, such as SVG, PNG, JPEG, and GIF.

It is available on Debian- and Ubuntu-based systems via the apt package manager.

$ sudo apt install cutycapt

To take a screenshot of a site such as ubuntumint.com, run the following command.

$ cutycapt --url=https://ubuntumint.com --out=ubuntumint.png --min-width=1400 --min-height=400

Adjust the --min-width and --min-height values until the captured screenshot looks the way you want.
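Because the output name and the minimum dimensions change from site to site, it can help to wrap the command in a function. The following is a minimal sketch, assuming Bash; the names `url_to_filename` and `snap_page`, the host-based file naming, and the `DRY_RUN` switch are hypothetical, and only the `--url`, `--out`, `--min-width`, and `--min-height` options come from the cutycapt command shown above.

```shell
#!/usr/bin/env bash
# Sketch: capture a URL with cutycapt, naming the PNG after the host name.
# url_to_filename, snap_page, and DRY_RUN are hypothetical helpers.

# url_to_filename URL -> prints "<host>.png"
url_to_filename() {
  local url="$1"
  url="${url#*://}"   # strip the scheme, e.g. "https://"
  url="${url%%/*}"    # drop any path after the host
  url="${url%%:*}"    # drop an explicit port, e.g. ":8080"
  printf '%s.png\n' "$url"
}

# snap_page URL [MIN_WIDTH] [MIN_HEIGHT]
snap_page() {
  local url="$1"
  local width="${2:-1400}"   # defaults mirror the example command above
  local height="${3:-400}"
  local out
  out="$(url_to_filename "$url")"

  local -a cmd=(cutycapt "--url=$url" "--out=$out"
                "--min-width=$width" "--min-height=$height")

  # With DRY_RUN=1, print the command instead of running it.
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[@]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `snap_page https://github.com 1600 900` would write `github.com.png`; tweak the two numbers per site until the result looks right.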

More cutycapt options are listed on its help page.

$ cutycapt -h

If the website serves a mobile or lite version of itself (which can affect screenshot quality), use the --user-agent flag so the screenshot resembles what a modern desktop browser would show.
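A sketch of that approach, assuming Bash: the function below passes a desktop Firefox User-Agent string to cutycapt through its --user-agent option. The string itself, the name `snap_desktop`, and the `DRY_RUN` switch are illustrative assumptions; substitute whatever User-Agent matches the browser you want to imitate.

```shell
#!/usr/bin/env bash
# Sketch: request the desktop version of a page by sending a desktop
# browser User-Agent via cutycapt's --user-agent option.
# The UA string is an illustrative example, not a value cutycapt requires.
DESKTOP_UA="Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0"

# snap_desktop URL OUTFILE  (hypothetical helper name)
snap_desktop() {
  local url="$1" out="$2"
  local -a cmd=(cutycapt "--url=$url" "--out=$out" "--user-agent=$DESKTOP_UA")

  if [ "${DRY_RUN:-0}" = "1" ]; then
    # Print one argument per line so the UA string stays readable.
    printf '%s\n' "${cmd[@]}"
  else
    "${cmd[@]}"
  fi
}
```

Keeping the arguments in an array means the spaces and parentheses in the User-Agent string reach cutycapt as a single argument without extra quoting.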


With these tools, you should be able to capture website screenshots effectively from the Linux command line.
