The “Too many open files” error in Nginx typically occurs when a process has reached the operating system's limit on the number of file descriptors it may have open at once.

This error can manifest in different ways, such as:

- Nginx refusing to start or crashing – When Nginx tries to open log files, configuration files, or sockets but reaches the system’s open file descriptor limit, it cannot proceed and may refuse to start or crash.

- Connection issues – Nginx might fail to handle incoming connections when the limit is reached, resulting in connection errors or slow response times.

The operating system limits how many file descriptors a process can open, for security and resource management reasons. When Nginx, or any other process, tries to open more than its limit allows, the call fails with this error.

Here are some common reasons why you might encounter this error:

- High traffic/load – Every client connection uses a file descriptor (and a second one when Nginx proxies the request to an upstream server), so a busy server can reach the limit through connections alone, before counting the files it serves.

- Misconfiguration – Incorrectly configured Nginx settings, particularly those related to logging, can lead to Nginx attempting to open an excessive number of log files, which may exceed the file descriptor limit.

- Too many worker processes – Each worker process opens its own file descriptors, so adding workers increases the total number of descriptors Nginx holds against the system-wide limit.

- Resource-intensive applications – If you have other resource-intensive applications running on the same server, they may consume file descriptors, leaving fewer available for Nginx.

- Insufficient system settings – The default limits (often a soft limit of 1024 descriptors per process) may be too low for your workload. They can be raised, as described below.
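To tell which of these causes applies, it helps to see how close each Nginx process actually is to its limit. The following is a minimal sketch, assuming a Linux host with /proc mounted and a root shell (without root, descriptors of processes owned by other users cannot be listed and show up as 0). The functions fd_count and fd_soft_limit are hypothetical helpers, not part of Nginx or any standard tool.

```shell
#!/usr/bin/env bash
# Sketch: show how close each running Nginx process is to its open-file limit.
# Linux-only (reads /proc); run as root to inspect processes owned by other users.

# Print the number of descriptors a PID currently has open.
fd_count() {
  # Each entry in /proc/<pid>/fd is one open descriptor.
  ls -1 "/proc/$1/fd" 2>/dev/null | wc -l
}

# Print the soft "Max open files" limit of a PID.
# The line looks like: "Max open files   1024   524288   files"
fd_soft_limit() {
  awk '/^Max open files/ {print $4}' "/proc/$1/limits" 2>/dev/null
}

for dir in /proc/[0-9]*; do
  pid="${dir#/proc/}"
  # Master and worker processes both report the command name "nginx".
  [ "$(cat "$dir/comm" 2>/dev/null)" = "nginx" ] || continue
  echo "PID $pid: $(fd_count "$pid") open, soft limit $(fd_soft_limit "$pid")"
done
```

A process whose open count sits close to its soft limit is the one hitting the error; if all counts are low, look at the system-wide limit instead.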

To resolve the “Too many open files” error, you can take the following steps:

Fixing “Too many open files” Nginx Error

To fix the “Too many open files” error in Nginx, you’ll need to take a systematic approach to identify and address the underlying causes.

1. Increase the Per-User File Descriptor Limit

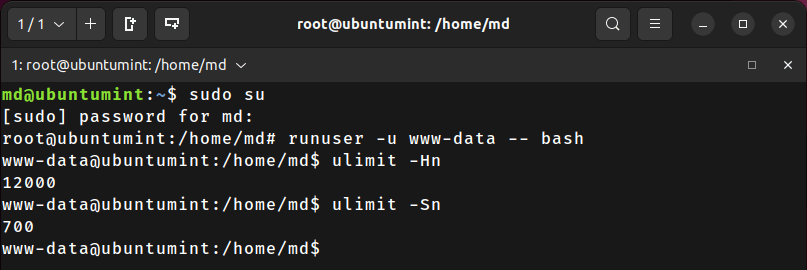

First, check the current file descriptor limits for the user Nginx runs as (“www-data” on Debian and Ubuntu). Because the “ulimit” command reports the limits of the shell it runs in, start a shell as “www-data” using root privileges:

$ sudo runuser -u www-data -- bash

Then check the hard and soft limits on open file descriptors for that session:

$ ulimit -Hn

$ ulimit -Sn

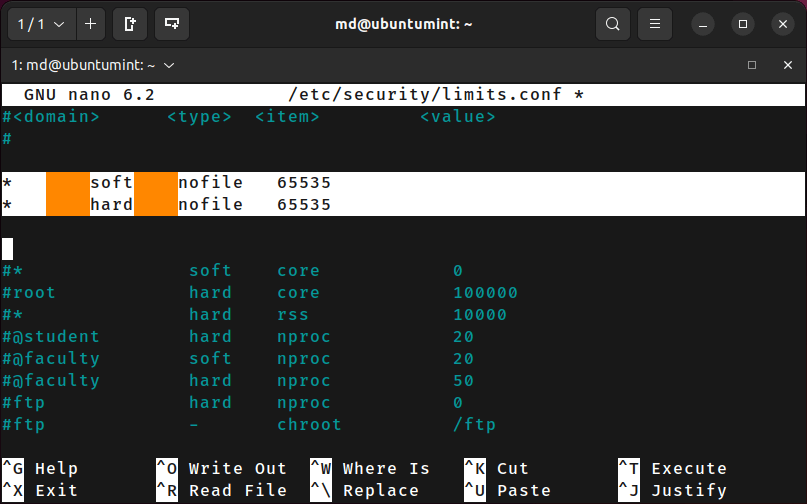

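To raise the per-user file descriptor limits, edit the “limits.conf” file in the “/etc/security/” directory. These limits are applied by PAM to login sessions; when Nginx runs as a systemd service, it takes its limits from the service unit (the LimitNOFILE= setting) instead, which is one reason the Nginx-level setting in step 3 matters.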

$ sudo nano /etc/security/limits.conf

Inside this file, add the following lines and save it:

    * soft nofile 65535
    * hard nofile 65535

Adjust 65535 to soft and hard limits that fit your system's resources and requirements; the soft limit cannot exceed the hard limit.

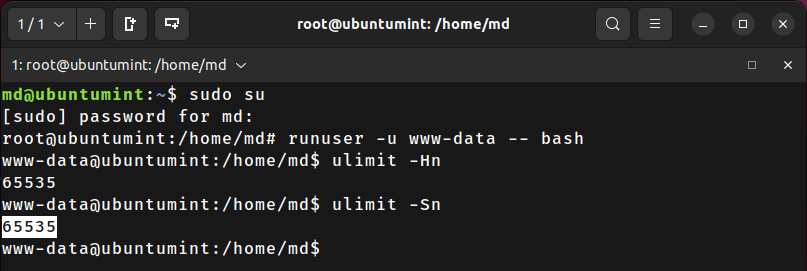
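If you prefer to script this edit rather than use nano, a minimal sketch could look like the following. The set_nofile_limit helper is hypothetical: it appends the soft and hard nofile lines for a given domain only when no entry for that domain and type exists yet, so running it twice does not duplicate lines.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "<domain> soft nofile <value>" and
# "<domain> hard nofile <value>" to a limits.conf-style file, skipping any
# type that already has an entry for that domain (existing values are kept).
set_nofile_limit() {
  local file="$1" domain="$2" value="$3" type
  for type in soft hard; do
    # awk exits 0 if a matching "<domain> <type> nofile" line exists, else 1.
    if ! awk -v d="$domain" -v t="$type" \
        '$1 == d && $2 == t && $3 == "nofile" { found = 1 } END { exit !found }' \
        "$file" 2>/dev/null; then
      printf '%s %s nofile %s\n' "$domain" "$type" "$value" >> "$file"
    fi
  done
}
```

From a root shell, call it as `set_nofile_limit /etc/security/limits.conf '*' 65535`, quoting the `*` so the shell does not expand it. Entries that already exist with a different value are left untouched and still need to be edited by hand.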

To verify the changes, start a new shell as “www-data” and confirm that both limits now report the values you set:

$ sudo runuser -u www-data -- bash

$ ulimit -Hn

$ ulimit -Sn

2. Increase System-Wide File Descriptor Limit

Next, consider raising “fs.file-max”, the kernel parameter that sets the maximum number of file handles the whole system can have open at the same time.
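To see how close the system is to this ceiling, you can read /proc/sys/fs/file-nr, whose three fields are the number of allocated file handles, the number of allocated but unused handles (reported as 0 on modern kernels), and the current fs.file-max. A small sketch, assuming a Linux host:

```shell
#!/usr/bin/env bash
# Sketch: report system-wide file handle usage (Linux-only).
# /proc/sys/fs/file-nr holds: allocated handles, allocated-but-unused
# handles (always 0 on modern kernels), and the current fs.file-max.
read -r allocated _unused max < /proc/sys/fs/file-nr

echo "allocated file handles: $allocated"
echo "fs.file-max:            $max"
# Integer percentage, rounded down.
echo "usage:                  $(( allocated * 100 / max ))%"
```

If usage is far below 100%, the error is coming from a per-process limit rather than from fs.file-max.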

To change fs.file-max permanently, edit the /etc/sysctl.conf file:

$ sudo nano /etc/sysctl.conf

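Add or modify the “fs.file-max” line to suit your workload and system resources. Check the current value first with “sysctl fs.file-max”: on many systems the default is already well above the example below, and setting a lower number would reduce the limit rather than raise it.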

fs.file-max=70000

Save the file, then apply the new configuration without rebooting:

$ sudo sysctl -p

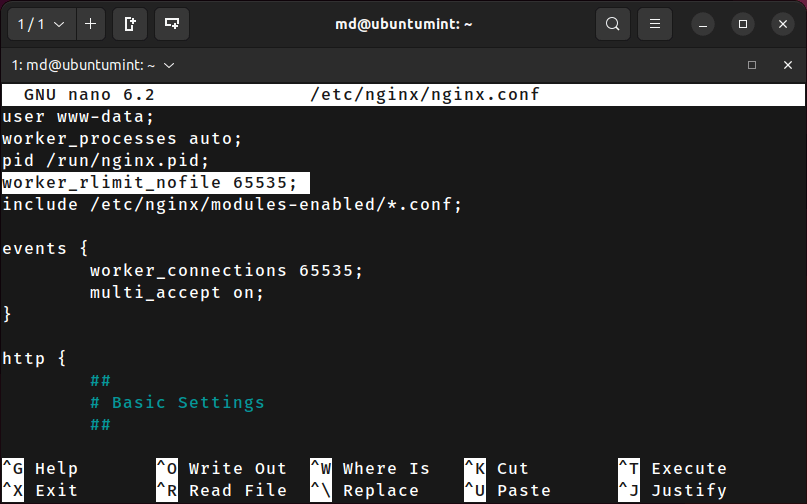
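Because the example value may be lower than what your kernel already allows, a scripted version of this step could compare the two first. This is a sketch under that assumption; needs_raise is a hypothetical helper, and the script only reports what it would do rather than writing to /etc/sysctl.conf.

```shell
#!/usr/bin/env bash
# Sketch: decide whether the example fs.file-max value would actually raise
# the running limit. Reports only; it does not edit /etc/sysctl.conf.

# Hypothetical helper: succeed (exit 0) only if desired > current.
needs_raise() {
  local current="$1" desired="$2"
  [ "$current" -lt "$desired" ]
}

desired=70000                          # example value from this step
current=$(cat /proc/sys/fs/file-max)   # value the kernel uses now

if needs_raise "$current" "$desired"; then
  echo "fs.file-max is $current; setting it to $desired would raise it"
else
  echo "fs.file-max is already $current; setting $desired would not raise it"
fi
```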

3. Increase worker_rlimit_nofile on Nginx

The “worker_rlimit_nofile” is a configuration directive in NGINX that sets the maximum number of file descriptors (also known as file handles) that can be opened by each worker process.

To increase the worker_rlimit_nofile value, you’ll need to make changes to your Nginx configuration file.

$ sudo nano /etc/nginx/nginx.conf

Add the worker_rlimit_nofile directive in the main (top-level) context of the configuration file, for example next to worker_processes, not inside the http or events block, where Nginx rejects it. If the directive already exists, change its value:

worker_rlimit_nofile 65535;

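Adjust the value according to your server's requirements and the maximum number of file descriptors your system can handle. It should be comfortably larger than worker_connections (set in the events block), because every connection, including connections to proxied servers, uses a descriptor, and open files and logs need more.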
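To pick a value, a rough per-worker budget can help. This sketch rests on stated assumptions rather than an official formula: one descriptor per connection counted by worker_connections (which already includes connections to upstream servers), as a worst case one open file per connection, and a small fixed allowance for log files and listening sockets. The fd_budget function, the worker_connections value of 16384, and the 64-descriptor allowance are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rough per-worker descriptor budget. The model is an assumption:
#   one socket per connection counted by worker_connections
#   (this count already includes upstream connections),
#   plus, as a worst case, one open file per connection,
#   plus a fixed allowance for logs and listening sockets.
fd_budget() {
  local connections="$1" extra="$2"
  echo $(( connections * 2 + extra ))
}

worker_connections=16384   # hypothetical events { worker_connections ...; } value
rlimit=65535               # worker_rlimit_nofile value used above
need=$(fd_budget "$worker_connections" 64)

if [ "$need" -le "$rlimit" ]; then
  echo "OK: about $need descriptors needed per worker, limit is $rlimit"
else
  echo "Raise worker_rlimit_nofile or lower worker_connections (need about $need)"
fi
```

With these numbers the estimate is 16384 × 2 + 64 = 32832 descriptors, which fits under 65535 with room to spare.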

After making all the changes, check the configuration for syntax errors with “sudo nginx -t”, then restart Nginx to apply the new limits:

$ sudo systemctl restart nginx

After the restart, the worker processes run with the new descriptor limit, which should stop the “Too many open files” error as long as the limits you chose cover your workload.
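To confirm that the restart took effect, you can read each worker's limit from /proc. This is a minimal sketch assuming Linux, a root shell, and the 65535 value used above; soft_nofile and check_workers are hypothetical helper names. It matches processes whose command line starts with “nginx: worker process”, which is how Nginx labels its workers.

```shell
#!/usr/bin/env bash
# Sketch: confirm that every running Nginx worker picked up the expected
# "Max open files" soft limit. Linux-only; run as root.

# Print the soft "Max open files" value for a PID.
soft_nofile() {
  awk '/^Max open files/ {print $4}' "/proc/$1/limits" 2>/dev/null
}

# Return 0 only if at least one worker was found and all of them match $1.
check_workers() {
  local expected="$1" found=0 status=0 dir pid cmd limit
  for dir in /proc/[0-9]*; do
    pid="${dir#/proc/}"
    # cmdline is NUL-separated; skip processes that vanished mid-loop.
    cmd=$( { tr '\0' ' ' < "$dir/cmdline"; } 2>/dev/null ) || continue
    case "$cmd" in
      "nginx: worker process"*) ;;
      *) continue ;;
    esac
    found=1
    limit=$(soft_nofile "$pid") || continue
    echo "worker $pid: Max open files (soft) = $limit"
    [ "$limit" = "$expected" ] || status=1
  done
  [ "$found" -eq 1 ] && [ "$status" -eq 0 ]
}

check_workers 65535 || echo "some workers do not use 65535 (or none are running)"
```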


Conclusion

Fixing the “Too many open files” Nginx error is essential for maintaining a smoothly running web server.

By adjusting the per-user and system-wide limits and raising Nginx's own worker limit, you can significantly improve your server's ability to handle many concurrent connections and file operations.

If the error returns, raise the limits further, keeping them within your server's capacity and requirements.