If you have worked with high-load Linux servers, there is a good chance you have run into the infamous “too many open files” error.

This error means that a process has opened as many files (more precisely, file descriptors) as its limit allows, so any further attempt to open one fails. Every process in a Linux environment runs under an open file limit, and the default soft limit is often small (1024 in the examples below).

This article investigates the causes of the “too many open files” error and the ways to fix it.

Understanding Linux File Descriptors

Linux follows an “everything is a file” model: disk partitions, network sockets, and regular data are all presented as files. Once a file is opened, the process refers to it by a non-negative integer called a file descriptor.

The kernel keeps a table of open file descriptors for each process. Whenever the process opens a new file, a new entry is added to that table.

For instance, consider using the cat command to display a simple text file. cat passes the filename to the open() system call, which returns a file descriptor for the file.

cat then uses that file descriptor to read the file and display its content. Once it has finished, it calls close() to release the descriptor.
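The same open, use, close sequence can be reproduced from the shell itself. The following is a minimal sketch rather than what cat does internally: the scratch file and its content are placeholders, and descriptor 9 is an arbitrary choice.

```shell
# Scratch file standing in for "a simple text file" (placeholder content).
demo_file=$(mktemp)
printf 'hello from the demo file\n' > "$demo_file"

# Open: the shell opens the file for reading and binds it to descriptor 9.
exec 9< "$demo_file"

# Use: read one line through the descriptor instead of through the file name.
IFS= read -r first_line <&9
echo "read through fd 9: $first_line"

# Close: release descriptor 9 so it no longer counts against the limit.
exec 9<&-

rm -f "$demo_file"
```

A program that skips the final close step keeps its descriptors until it exits, which is a common way for a long-running process to drift toward the open file limit.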

Every process normally starts with three file descriptors already open. Each one has a fixed number:

- 0 for stdin

- 1 for stdout

- 2 for stderr
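Because these numbers are fixed, shell redirections can address each stream by its descriptor. A small sketch, where emit_both is a hypothetical helper written only for this illustration:

```shell
# Hypothetical helper: writes one line to stdout (fd 1) and one to stderr (fd 2).
emit_both() {
  echo "normal output"       # goes to fd 1
  echo "error output" >&2    # goes to fd 2
}

# Discard fd 2 and capture only what was written to fd 1.
only_stdout=$(emit_both 2>/dev/null)

# Point fd 2 at the capture first, then discard fd 1, to capture only fd 2.
only_stderr=$(emit_both 2>&1 >/dev/null)

echo "fd 1 carried: $only_stdout"
echo "fd 2 carried: $only_stderr"
```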

Examining Linux Open File Descriptors

Linux lets us see how many file descriptors are in use, both across the whole system and for an individual process, which makes it possible to account for this resource.

Global Usage of File Descriptors

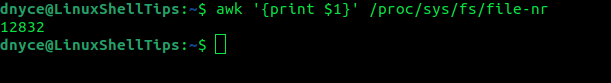

The first field of the /proc/sys/fs/file-nr file holds the number of file handles currently allocated system-wide. We can print it with the following awk one-liner:

$ awk '{print $1}' /proc/sys/fs/file-nr

12832
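The file has two more fields that are worth reading alongside the first one. The sketch below assumes a Linux system with procfs mounted at /proc; the variable names are our own.

```shell
# /proc/sys/fs/file-nr holds three numbers: allocated handles,
# allocated-but-unused handles, and the system-wide maximum.
read -r allocated unused maximum < /proc/sys/fs/file-nr

echo "allocated: $allocated"
echo "unused:    $unused"
echo "maximum:   $maximum"

# Rough share of the global limit in use (integer division, so small shares print as 0).
echo "in use:    $(( allocated * 100 / maximum ))%"
```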

Per-Process Usage of File Descriptors

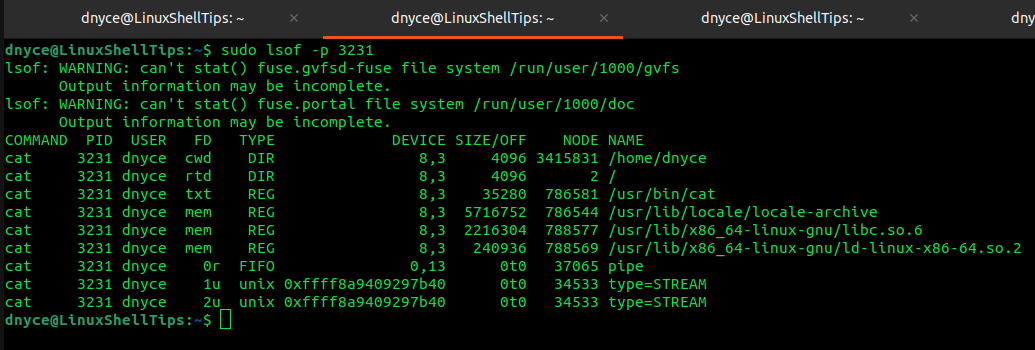

To check a process’s file descriptor usage, we first need to find the process’s ID (PID) and then pass it to the lsof command. For instance, the file descriptors held by a running cat process can be listed in the following manner:

First, run the ps aux command to determine the process id:

$ ps aux

Then run the lsof command with that PID (3231 in this example) to list the file descriptors the process has open.

$ sudo lsof -p 3231

The NAME column shows the file each descriptor refers to, and the TYPE column shows the file type.
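Where lsof is not installed, the same information can be read from procfs, which exposes one entry per open descriptor under /proc/<pid>/fd. A minimal sketch, assuming procfs is mounted at /proc; count_open_fds is a hypothetical helper name:

```shell
# Hypothetical helper: count the entries in /proc/<pid>/fd, one per open descriptor.
count_open_fds() {
  ls "/proc/$1/fd" | wc -l
}

# $$ is the PID of the current shell; substitute any PID you are allowed to inspect.
echo "shell $$ has $(count_open_fds "$$") open descriptors"

# Each entry is a symbolic link to the file, socket, or pipe behind the descriptor.
ls -l "/proc/$$/fd"
```

Inspecting another user's process this way requires the same privileges as the sudo lsof call above.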

Check Linux File Descriptor Limits

The file descriptor limit is the maximum number of files a process may have open at any given time. It comes in two forms: a soft limit and a hard limit. The soft limit is the value the kernel actually enforces; an unprivileged user can lower it or raise it, but never above the hard limit. The hard limit is the ceiling for the soft limit; an unprivileged user can only lower it, and only a privileged user can raise it.
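The relationship between the two limits can be observed without any privileges. The sketch below runs inside a subshell so that the calling session keeps its limits; soft_limit_demo is a hypothetical name and 64 is an arbitrary demonstration value.

```shell
# The parentheses run the function body in a subshell, so the caller's limits are untouched.
soft_limit_demo() (
  original=$(ulimit -Sn)

  # Lowering the soft limit never needs privileges.
  ulimit -Sn 64 || exit 1
  echo "lowered soft limit:  $(ulimit -Sn)"

  # Raising it again is allowed because the value stays at or below the hard limit.
  ulimit -Sn "$original" || exit 1
  echo "restored soft limit: $(ulimit -Sn)"
  echo "hard limit (ceiling): $(ulimit -Hn)"
)

soft_limit_demo
```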

Per-Session Limit

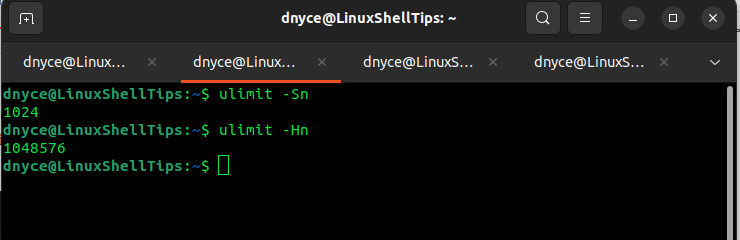

To check the soft limit, use the -Sn flag together with the ulimit command.

$ ulimit -Sn

To check the hard limit, use the -Hn flag together with the ulimit command.

$ ulimit -Hn

Per-Process Limit

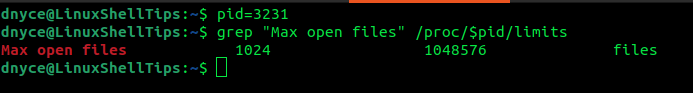

Here, we first retrieve a process’s PID, store it in a variable, and use it to read that process’s limits file in procfs.

$ pid=3231

$ grep "Max open files" /proc/$pid/limits

The second and third columns show the soft and hard limits, respectively.
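To judge how close a process is to its limit, the limits can be combined with its current descriptor count. This is a sketch under the assumption that procfs is mounted at /proc; it inspects the current shell, and the variable names are our own.

```shell
pid=$$   # current shell; replace with the PID you are investigating

# In the "Max open files" row, whitespace-separated fields 4 and 5
# are the soft and hard limits.
soft=$(awk '/^Max open files/ {print $4}' "/proc/$pid/limits")
hard=$(awk '/^Max open files/ {print $5}' "/proc/$pid/limits")

# One entry per open descriptor.
in_use=$(ls "/proc/$pid/fd" | wc -l)

echo "PID $pid: $in_use descriptors open, soft limit $soft, hard limit $hard"
```

A process whose open count sits close to its soft limit is a likely source of the error.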

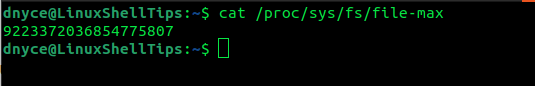

Global Limit

The global limit is the total number of file handles the kernel will allocate for all processes combined.

$ cat /proc/sys/fs/file-max

Fixing “Too Many Open Files” Error in Linux

Now that we understand the role of file descriptors, we can fix the “too many open files” error by increasing the file descriptor limit.

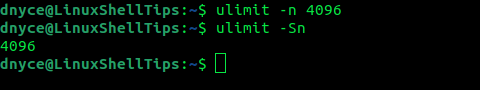

Temporarily (Per-Session)

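This is the recommended approach when the higher limit is only needed for the current shell session, because the change disappears when the session ends. Here, we will use the ulimit command with the -n (open files) option.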

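$ ulimit -Sn 4096

$ ulimit -Sn

We have changed the soft limit from 1024 to 4096. The -S flag matters here: in bash, ulimit -n 4096 without -S or -H sets both the soft and the hard limit, and an unprivileged user cannot raise a lowered hard limit again.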
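When only one program needs the higher limit, the change can be scoped to that program instead of the whole session. A minimal sketch: run_with_nofile is a hypothetical helper, and the example raises the soft limit to the current hard limit because that is always permitted; a fixed value such as 4096 can be passed instead.

```shell
# Hypothetical helper: run one command with a different soft limit.
# The parentheses make the body a subshell, so the calling shell keeps its own limit.
run_with_nofile() (
  limit=$1
  shift
  ulimit -Sn "$limit" || exit 1
  exec "$@"
)

# The child process sees the adjusted soft limit...
run_with_nofile "$(ulimit -Hn)" sh -c 'echo "inside:  $(ulimit -n)"'

# ...while the current session is unchanged.
echo "outside: $(ulimit -Sn)"
```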

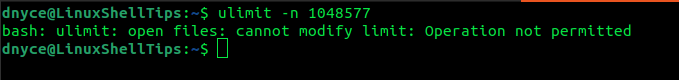

Going beyond the hard limit, which is 1048576 on this system, requires privileged access. For a regular user, the following command is rejected:

$ ulimit -n 1048577

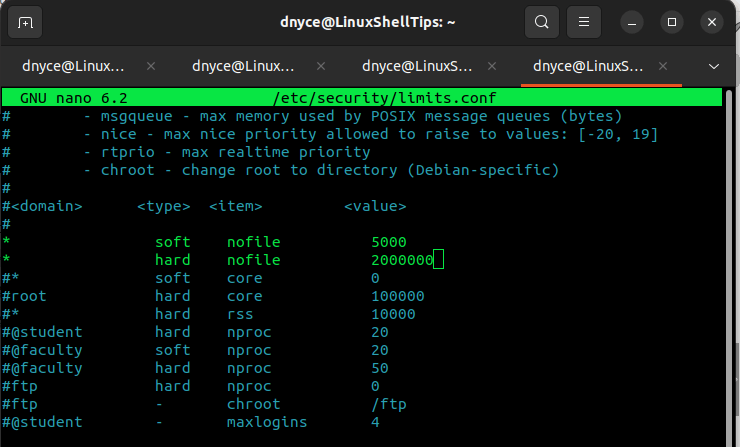

Increase Per-User Limit

Here, we need to first open the /etc/security/limits.conf file.

$ sudo nano /etc/security/limits.conf

As shown in the screen capture below, you can append entries like:

#<domain> <type> <item> <value>

* soft nofile 5000

* hard nofile 2000000

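The <item> field must be nofile, the limits.conf keyword for the maximum number of open file descriptors.

These limits are applied at login to the sessions of every user matched by <domain>; the * wildcard matches all users except root. Log out and back in, or restart the system, for the changes to take effect. If the new hard value is higher than the kernel's per-process ceiling in /proc/sys/fs/nr_open, it may be rejected, so check that file first.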
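Before logging out, it can help to confirm which nofile rules the file actually contains. The sketch below uses a hypothetical helper, show_nofile_rules, and runs it against a temporary sample file that stands in for /etc/security/limits.conf.

```shell
# Hypothetical helper: print domain, type, and value of every nofile rule
# in a limits.conf-style file, skipping comment lines.
show_nofile_rules() {
  awk '!/^[ \t]*#/ && $3 == "nofile" {print $1, $2, $4}' "$1"
}

# Sample file standing in for /etc/security/limits.conf.
sample=$(mktemp)
cat > "$sample" <<'EOF'
#<domain> <type> <item> <value>
* soft nofile 5000
* hard nofile 2000000
EOF

show_nofile_rules "$sample"
rm -f "$sample"
```

On a real system, point the helper at /etc/security/limits.conf or at a file under /etc/security/limits.d/.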

Globally Increase Open File Limit

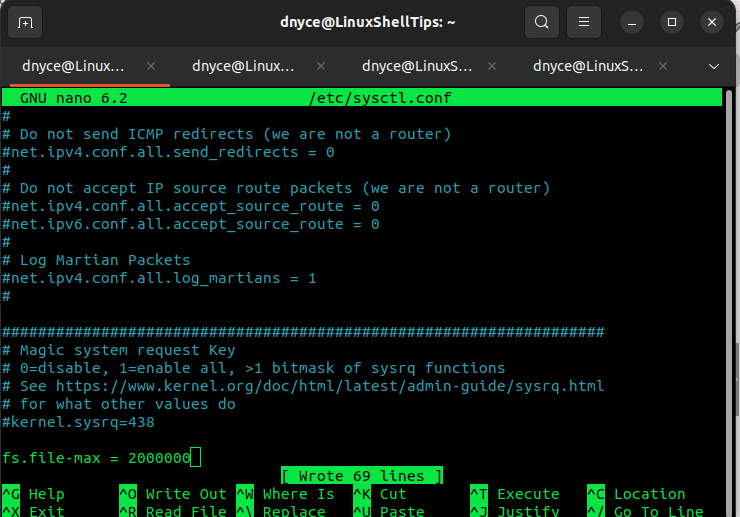

Open the /etc/sysctl.conf file.

$ sudo nano /etc/sysctl.conf

Append the following line with your desired file descriptor value.

fs.file-max = 2000000

Save the file and reload the configuration:

$ sudo sysctl -p

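The new value takes effect as soon as the configuration is reloaded and persists across reboots, so no restart is needed. Reading /proc/sys/fs/file-max again should now show the new value.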
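A quick way to confirm the result is to compare the running value with the configured one. This is a sketch under the assumption that the setting lives in /etc/sysctl.conf; configured_file_max is a hypothetical helper.

```shell
# Hypothetical helper: print the last uncommented fs.file-max value
# found in a sysctl.conf-style file.
configured_file_max() {
  awk -F= '/^[ \t]*fs\.file-max[ \t]*=/ {gsub(/[ \t]/, "", $2); value = $2} END {print value}' "$1"
}

# Value the kernel is using right now.
running=$(cat /proc/sys/fs/file-max)
echo "running value:    $running"

# Compare with the configured value if the file exists on this system.
if [ -r /etc/sysctl.conf ]; then
  echo "configured value: $(configured_file_max /etc/sysctl.conf)"
fi
```

If the two values differ, the setting may be overridden by a file under /etc/sysctl.d/, or the configuration may not have been reloaded yet.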

We are now comfortable with handling the “Too many open files” error on our Linux systems.